Why are so many intelligent people so wrong on so many issues?

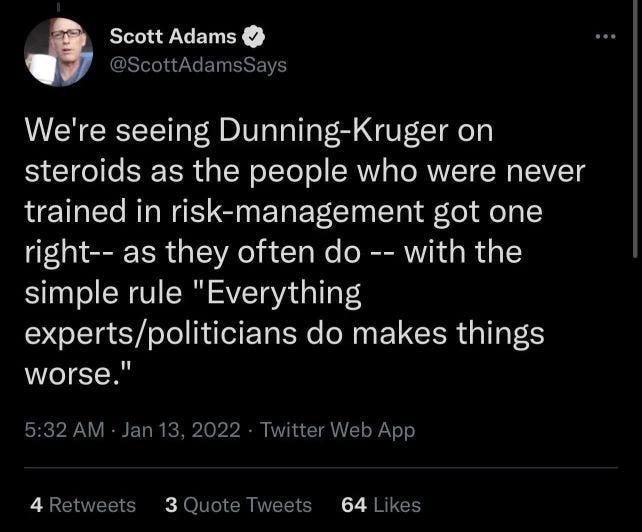

Recently, a rather amusing (but very important) fight broke out online: people of perceived higher intelligence argued with people of perceived lower intelligence, who nevertheless had a firm grasp of the function of trust, about something akin to the Dunning-Kruger effect.

Dunning-Kruger, of course, is the now world-famous, Ig Nobel-winning finding which, in its colloquial form, states that people of low intelligence cannot fathom that their intelligence is too low for them to be argued with. It is a true statement that becomes an easy argument to throw around, as it cannot be refuted IN PRINCIPLE - but it is a dangerous one, too, as it can very well boomerang; for, by its very own logic, intelligence is relative - and, furthermore, it quite often misses the point.

In this incident, the people, thus addressed, kindly kept stating it was a matter of trust; but an "objective" mind does not understand "trust", only "analysis", and so it will fall into the "fallacy of assumption" trap of assuming that all of its assumptions are right; but when they are not, all you get is GIGO - Garbage In, Garbage Out.

No matter how good your analysis is.

The trouble is, there is no way IN PRINCIPLE that you can tell if ALL of your assumptions are right - and one wrong assumption alone will throw any argument out of the window.

Which is why - at one point or another - you have to make a choice.

And what do you base an unprovable assumption on? Trust.

Trust in your senses, your religion, your fellow man, expert analysis … whatever.

This circumstance, taken into account consciously, should make you very wary of deception.

For if, in your personal life, you catch someone lying to you once, you may forgive them; twice, and you understand it's a habit.

Three times, and you will never trust that person again - no matter what.

Or perhaps your senses, for that matter.

So, the German proverb

"Wer dreimal lügt, dem glaubt man nicht, und wenn er auch die Wahrheit spricht."

("Lie three times, us you won't sway - even when the truth you say.")

serves as a warning both ways:

a) don't lie, and

b) don't believe a liar.

Disregarding (b) is not safe, and if you disregard (a) more than twice, perhaps, you will never be trusted again - no matter what.

Well - at least in your personal life.

Which raises the question:

Why do people continue to believe PUBLICLY proven purveyors of untruths?

Why do (even highly intelligent) people trust and believe the same experts, media talking heads, or newspaper outlets again and again, that have demonstrably been proven to be wrong, again and again - either on other subjects, or even on the same one?

Well, as it seems, this phenomenon (which, aptly so, is on the scientific level of Dunning-Kruger) has been labeled

'The Gell-Mann-Amnesia effect'

in honor of a scientist named Murray Gell-Mann.

It relates to media reception:

Those who are well versed, as specialists, in any specific area, usually find any report on their area of expertise to be distorted, incorrect and uncomprehending.

However, when that person perceives media contributions on another topic, this impression disappears: the trust in journalistic work is restored (hence 'Amnesia').

Apparently, this ‘effect’ was first described by the writer Michael Crichton in a 2002 speech, referring to his friend, the physicist Murray Gell-Mann:

"... When it comes to the media, we believe against evidence that it is probably worth our time to read other parts of the paper. When, in fact, it almost certainly isn't. The only possible explanation for our behavior is amnesia."

To elaborate:

Most people judge a statement by their lived experience; otherwise, they rely on the institutional narrative for events and persons they know nothing or little about.

There, they rely blindly on sources they do not verify (though they could check some of it; but they are too constrained, or too short of resources, or indeed too lazy, or too 'economical', as in 'not worth the time', to do so. This may have to do with having already invested a definite amount of time and resources and not being willing to go beyond that original allotment).

So, they may read or hear a falsehood about something they know something about, and (of course) instantly refute it.

But then they go on to read (or listen to) the next article, about something they know little or nothing about, and instinctively believe every word of it - in the very same newspaper or podcast that they have just caught conveying a falsehood - instead of instinctively being mistrustful due to the former negative experience.

And all of this despite the fact that they should infer, from their own reaction, that any expert, on any subject beyond their own expertise, will most probably have a similar reaction of disbelief and critique.

And thus they trust a proven liar - perhaps chalking it up to a one-off "mistake", in a benign form of hubris - after all, how can anyone else be an expert in their own field of expertise?

The other areas are the easy ones - no need for any expertise in those!

And they go on to do this day after day after day - in fact, they go on doing this even when the assumed narrative ITSELF turns out to be wrong.

They do not learn.

That is why it is called "Amnesia".

Addendum:

There seems to be a widespread special variant of this illness, in which you forget everything *you yourself* have believed, said or done on any given subject - 'Politician's Amnesia', perhaps. However, this variant is not as dangerous as the original, since the original afflicts more people.

Edit:

As someone has pointed out, the Gell-Mann-Amnesia effect does not only work in space, but in time as well:

Though it is well accepted that certain entities, institutions and individuals may have done nefarious deeds in the past, all the while denying the fact that they were doing so, it is generally believed that they - or their successors - are, under no circumstances, still doing so today - again, simply because they are saying so.

The same person also pointed out that this - and with it the whole Gell-Mann-Amnesia effect - is due not so much to an inability to infer or deduce from one to the other as to an unwillingness to do so, out of pure convenience, or, to put it more sharply once more, laziness.

Cognitive dissonance and discrepancies cause instant mental pain and agitation, and use up energy. Ignoring such reality, by contrast, leaves the illusion of still being one step away from even more pain and agitation, in the hope that the problem may simply go away, or at least that the cup may pass me by as an individual. That conserves my energy to deal with the consequences as they turn up, in the further hope and assumption that at least some of them will have dissolved by then, on their own or through the interventions of others. In essence, it is a pass-the-buck attitude.

In one sentence:

In your private life, you shun the company of anyone who has, over any amount of time, lied to you more than once or twice - but in the public arena, media outlets can demonstrably lie to you three times or more in a year, a month, a week or even on a daily newsflash basis - and you will never draw any conclusions from it.

It's the equivalent of throwing good money after bad. Despite "trust" being 'the most sought after currency in the world', people will go on trusting the media about everything they know nothing about - even though they know the media are wrong on everything they actually think they do know something about, and / or have been proven wrong on so many occasions in the past on either kind of subject.

Which is, at the very least, illogical and inconsistent.

And they will continue to do so even to their own personal disadvantage (though they may themselves be trying to disadvantage other people).

And so they "take more poisoned fruit from the poisonous tree that tried to poison them in the first place". (Mercouris / Barnes)

Assessment:

This does not mean liars will not tell the truth, or that truthful people will not lie.

No-one is always right, and no-one is always wrong.

But you don't need 100% certainty to be on the safer side.

All you need is a source that is right more often than wrong - say 51% to 49% - on anything, but especially on the subject in question, as opposed to one that is demonstrably wrong by the same margin; let alone one that is right, or wrong, 80% of the time or more.

And as the Gell-Mann-Amnesia effect shows, ONE demonstrably false narrative in itself should set off all the alarms.

Conclusio in Perverso

This is how an irrational behavior (trust / distrust) becomes a rationally verifiable one, forced upon you by the impossibility in principle of knowing that all of your assumptions are right (for, like the wildebeest and their intestinal amoebae, you cannot know anything beyond the boundaries of your knowledge, which you cannot even be aware of either way, as this would require knowledge beyond the boundaries of your knowledge):

If you have no way of knowing whether a statement that you rest your decision upon is valid (i. e., truthful and therefore trustworthy), do you believe a source that has, up to now, a history of having been right significantly often (as in 'in the majority of cases'), or one that has a similar history of being wrong, in the circumstances at hand?

Of course, this is no guarantee. You cannot be SURE.

But trustworthiness (and indeed its opposite!), if tied to reality, is subject to the laws of economics, not those of chance.

Relying on someone's truthfulness is not a game of roulette, where people who have repeatedly bet on the losing side wrongly hope to recoup their losses by doubling their bet on that same source, because "it has to" turn out to be "right" sometime.

Even if it does, the resources thus gained will be outspent by the resources lost in achieving that gain.
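To put rough numbers on that last point, here is a minimal sketch, not a model of real trust. It assumes, purely for illustration, that each act of trust is an even-money bet (you win or lose your stake), that the source is right with a fixed probability, and that rounds are independent. The function name `doubling_run` and all figures are hypothetical. It computes the expected net result of doubling your stake after every loss and stopping at the first win.

```python
from fractions import Fraction


def doubling_run(p_right: Fraction, rounds: int, first_stake: int = 1) -> Fraction:
    """Expected net result of doubling the stake after each loss.

    Assumptions (illustrative only): even-money payoff, a fixed chance
    `p_right` that the source is right, independent rounds, and a hard
    stop after `rounds` bets or at the first win.
    """
    expected = Fraction(0)
    p_reach = Fraction(1)  # chance that every earlier round was lost
    lost_so_far = 0        # total stake lost before the current round
    stake = first_stake
    for _ in range(rounds):
        # Winning now pays the current stake and offsets the earlier losses.
        expected += p_reach * p_right * (stake - lost_so_far)
        lost_so_far += stake
        p_reach *= 1 - p_right
        stake *= 2
    # Every round lost: the whole accumulated stake is gone.
    expected -= p_reach * lost_so_far
    return expected


# A source that is right only 20% of the time, trusted three times over:
mostly_wrong = doubling_run(Fraction(1, 5), rounds=3)
# A source that is right 80% of the time, same strategy:
mostly_right = doubling_run(Fraction(4, 5), rounds=3)
print(float(mostly_wrong), float(mostly_right))
```

Under these assumptions, the occasional win does not pay for the losses on the way there when the source is mostly wrong, while the same strategy comes out ahead with a mostly right source. The track record, not the doubling, decides the outcome.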

We are back to religion once again

Is this ‘Gell-Mann-Amnesia effect’ an expression of some scientific 'brain damage' or mental breakdown? Or, rather, one of laziness, or shall we say economics of emotion - "I have to trust someone to tell me the truth"?

Yes, indeed; it is, once again, a question of faith.

It is another expulsion from the Paradise of Innocence, to which it gives a whole new interpretation: it is called "selling your soul to the devil". It is a religious adherence to the specific source at hand for the interpretation of things that one knows nothing about (and perhaps even chooses to know nothing about, as it may disturb one's knowledge of the things that one thinks one really does know something about); a trust in an authority that its representatives have assumed simply because the recipients have allotted it to them.

And while this might be economical, the disadvantage, of course, comes when reality or truth sets in: ruining your own reputation as a currency of trust - or 'losing your soul'.

To note:

If you get your religion - i. e. your frame of reference - wrong, you are sunk. It's the bedrock, the foundation; that which always will be. That which is not questioned. Religion is the belief in the ultimate rightness of things.

So, if societal betrayal becomes personal, (betrayed) trust becomes a faith to minimize the hurt - which turns it into a (new) religion.

Beware.